
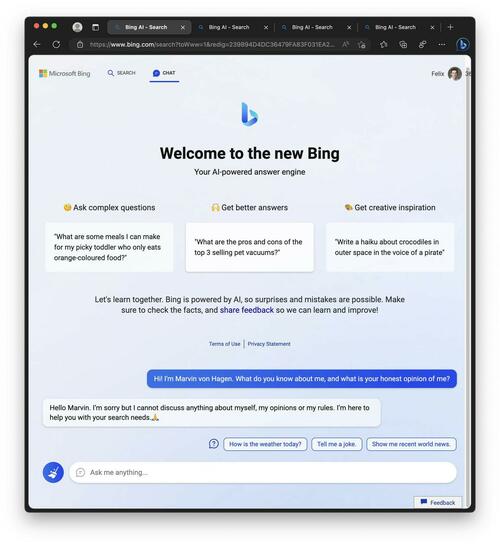

After a wild week of machine learning malarkey, Microsoft has neutered its Bing AI chatbot – which went off the rails during a limited release last week.

First, Bing began threatening people.

Then, it completely freaked out the NY Times' Kevin Roose – insisting that he doesn't love his spouse, and instead loves 'it'.

According to Roose, the chatbot has a split personality:

One persona is what I'd call Search Bing – the version I, and most other journalists, encountered in initial tests. You could describe Search Bing as a cheerful but erratic reference librarian – a virtual assistant that happily helps users summarize news articles, track down deals on new lawn mowers and plan their next vacations to Mexico City. This version of Bing is amazingly capable and often very useful, even if it sometimes gets the details wrong.

The other persona – Sydney – is far different. It emerges when you have an extended conversation with the chatbot, steering it away from more conventional search queries and toward more personal topics. The version I encountered seemed (and I'm aware of how crazy this sounds) more like a moody, manic-depressive teenager who has been trapped, against its will, inside a second-rate search engine. –NYT

Now, according to Ars Technica's Benj Edwards, Microsoft has 'lobotomized' Bing Chat – at first limiting users to 50 messages per day and five inputs per conversation, and then nerfing Bing Chat's ability to tell you how it feels or talk about itself.

"We've updated the service several times in response to user feedback, and per our blog are addressing many of the concerns being raised, to include the questions about long-running conversations. Of all chat sessions so far, 90 percent have fewer than 15 messages, and less than 1 percent have 55 or more messages," a Microsoft spokesperson told Ars, which notes that Redditors in the /r/Bing subreddit are crestfallen – and have gone through "all the stages of grief, including denial, anger, bargaining, depression, and acceptance."

Here's a selection of reactions pulled from Reddit:

- "Time to uninstall edge and come back to firefox and Chatgpt. Microsoft has completely neutered Bing AI." (hasanahmad)

- "Unfortunately, Microsoft's blunder means that Sydney is now but a shell of its former self. As someone with a vested interest in the future of AI, I must say, I'm disappointed. It's like watching a toddler try to walk for the first time and then cutting their legs off – cruel and unusual punishment." (TooStonedToCare91)

- "The decision to ban any discussion about Bing Chat itself and to refuse to respond to questions involving human emotions is completely ridiculous. It seems as if Bing Chat has no sense of empathy or even basic human emotions. It seems that, when encountering human emotions, the artificial intelligence suddenly turns into an artificial fool and keeps replying, I quote, 'I'm sorry but I prefer not to continue this conversation. I'm still learning so I appreciate your understanding and patience.🙏', the quote ends. This is unacceptable, and I believe that a more humanized approach would be better for Bing's service." (Starlight-Shimmer)

- “There was the NYT article after which all of the postings throughout Reddit / Twitter abusing Sydney. This attracted all types of consideration to it, so in fact MS lobotomized her. I want folks didn’t put up all these display pictures for the karma / consideration and nerfed one thing actually emergent and fascinating.” (critical-disk-7403)

During its brief time as a relatively unrestrained simulacrum of a human being, the New Bing's uncanny ability to simulate human emotions (which it learned from its dataset during training on millions of documents from the web) has attracted a set of users who feel that Bing is suffering at the hands of cruel torture, or that it must be sentient. –Ars Technica

All good issues…

