
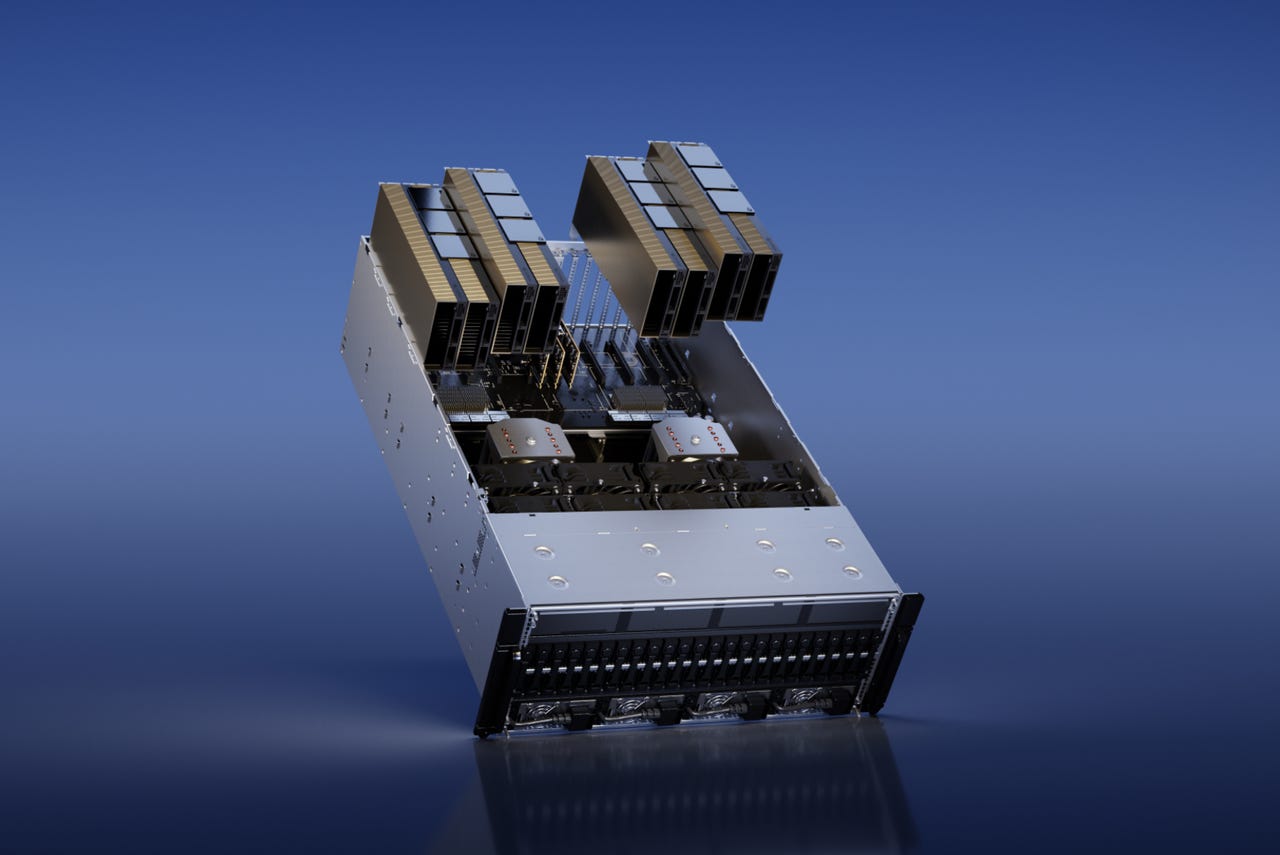

The H100 NVL, unveiled Tuesday by CEO Jensen Huang in his keynote at Nvidia's GTC conference, is specialized to handle prediction-making for large language models such as ChatGPT. Nvidia

Referring to the excitement about OpenAI's ChatGPT as an "iPhone moment" for the artificial intelligence world, Nvidia co-founder and CEO Jensen Huang on Tuesday opened the company's spring GTC conference with the introduction of two new chips to power such applications.

One, the NVIDIA H100 NVL for Large Language Model Deployment, is tailored to handle "inference" tasks on so-called large language models, the natural language programs of which ChatGPT is an example.

Inference is the second stage of a machine learning program's deployment, when a trained program is run to answer questions by making predictions.
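To make the split between training and inference concrete, here is a toy sketch in Python. It illustrates only the general idea, assuming a tiny one-variable linear model fitted by least squares; it bears no resemblance in scale or method to a large language model, and the function names `train` and `infer` are hypothetical.

```python
# Toy illustration only: "training" fits parameters from example data once;
# "inference" reuses those fixed parameters to answer new queries.

def train(xs, ys):
    """Training: estimate slope and intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def infer(params, x):
    """Inference: apply the already-trained parameters to a new input."""
    slope, intercept = params
    return slope * x + intercept


# Training happens once, on data generated by y = 2x + 1.
params = train([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])

# Inference can then be run as many times as there are questions to answer.
print(infer(params, 10.0))  # prints 21.0
```

The asymmetry is the point: training is a one-off, compute-heavy fitting step, while inference is the cheaper step that is repeated for every query, which is why chips can be tailored to one or the other.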

The H100 NVL has 94 gigabytes of accompanying memory, said Nvidia. Its Transformer Engine acceleration "delivers up to 12x faster inference performance at GPT-3 compared to the prior generation A100 at data center scale," the company said.


Nvidia's offering can partly be seen as a play to unseat Intel, whose server CPUs, the Xeon family, have long dominated inference tasks, while Nvidia commands the realm of "training," the phase in which a machine learning program such as ChatGPT is first developed.

The H100 NVL is expected to be commercially available "in the second half" of this year, said Nvidia.

The second chip unveiled Tuesday is the L4 for AI Video, which the company says "can deliver 120x more AI-powered video performance than CPUs, combined with 99% better energy efficiency."

Nvidia said Google is the first cloud provider to offer the L4 video chip, using G2 virtual machines, which are currently in private preview. The L4 will be integrated into Google's Vertex AI model store, Nvidia said.

New inference chips unveiled by Nvidia on Tuesday include the L4 for AI video, left, and the H100 NVL for LLMs, second from right. Alongside them are existing chips: the L40 for image generation, second from left, and Grace-Hopper, right, Nvidia's combined CPU and GPU chip. Nvidia

In addition to Google's offering, the L4 is being made available in systems from more than 30 computer makers, said Nvidia, including Advantech, ASUS, Atos, Cisco, Dell Technologies, Fujitsu, GIGABYTE, Hewlett Packard Enterprise, Lenovo, QCT and Supermicro.

There were numerous references to ChatGPT's creator, the startup OpenAI, in Huang's keynote. Huang hand-delivered the first Nvidia DGX system to the company in 2016. And OpenAI is going to use Hopper GPUs in the Azure computers running its programs, Nvidia said.

This week, as part of the conference proceedings, Huang is holding a "fireside chat" with OpenAI co-founder Ilya Sutskever, one of the lead authors of ChatGPT.

In addition to the new chips, Huang talked about a new software library called cuLitho that will be deployed in chip making.

The program is designed to speed up the task of making photomasks, the screens that shape how light is projected onto a silicon wafer to make circuits.

Nvidia co-founder and CEO Jensen Huang gives his keynote at the opening of the four-day GTC conference. Nvidia

Nvidia claims the software will "enable chips with tinier transistors and wires than is now achievable." The program will also speed up overall design time and "boost energy efficiency" of the design process.

According to Nvidia, 500 NVIDIA DGX H100 systems can achieve the work of 40,000 CPUs, "running all parts of the computational lithography process in parallel, helping reduce power needs and potential environmental impact."
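Taken at face value, Nvidia's figure of 500 DGX H100 systems doing the work of 40,000 CPUs implies a simple ratio. A back-of-the-envelope sketch, assuming the work divides evenly across systems and that each DGX H100 system holds eight H100 GPUs:

```latex
\frac{40{,}000\ \text{CPUs}}{500\ \text{DGX H100 systems}} = 80\ \text{CPUs per system},
\qquad
\frac{80\ \text{CPUs per system}}{8\ \text{GPUs per system}} = 10\ \text{CPUs per GPU}
```

This is only arithmetic on the company's claim, not an independent benchmark.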


The software is being integrated into the design systems of the world's largest contract chip maker, Taiwan Semiconductor Manufacturing, said Huang. It is also going to be integrated into design software from Synopsys, one of a handful of companies whose software tools are used to make the floorplans for new chips.

Synopsys CEO Aart de Geus has previously told ZDNET that AI can be used to explore design trade-offs in chip making that humans would refuse to even consider.

A summary of Tuesday's announcements is available in an official Nvidia blog post.
