
Yves here. Yours truly, like many other owners of websites that publish original content, is plagued by site scrapers, as in bots that purloin our posts by reproducing them without permission. It turns out that ChatGPT is engaged in that sort of theft on a mass basis.

Perhaps we should take to calling it CheatGPT.

By Uri Gal, Professor in Business Information Systems, University of Sydney. Originally published at The Conversation

ChatGPT has taken the world by storm. Within two months of its release it reached 100 million active users, making it the fastest-growing consumer application ever launched. Users are attracted to the tool’s advanced capabilities – and concerned by its potential to cause disruption in various sectors.

A much less discussed implication is the privacy risk ChatGPT poses to every one of us. Just yesterday, Google unveiled its own conversational AI called Bard, and others will surely follow. Technology companies working on AI have well and truly entered an arms race.

The problem is it’s fuelled by our personal data.

300 Billion Words. How Many Are Yours?

ChatGPT is underpinned by a large language model that requires massive amounts of data to function and improve. The more data the model is trained on, the better it gets at detecting patterns, anticipating what will come next and generating plausible text.
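To make the "more data, better predictions" point concrete, here is a toy illustration. It is a hypothetical bigram word counter, vastly simpler than the neural network behind ChatGPT, and is not how OpenAI's model actually works. It only shows the basic idea: a model that predicts the next word from text it has seen can only answer for words it was fed, so a bigger training corpus lets it cover more cases. The function names `train_bigrams` and `predict_next` are invented for this sketch.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# A tiny corpus versus a slightly larger one (both made up for illustration).
small = train_bigrams("the cat sat on the mat")
larger = train_bigrams(
    "the cat sat on the mat . the cat ate . the dog sat on the rug . the cat slept"
)

print(predict_next(small, "dog"))   # None: "dog" never appears in the small corpus
print(predict_next(larger, "dog"))  # sat
print(predict_next(larger, "the"))  # cat: it follows "the" three times
```

The small model has nothing to say about "dog", while the larger one does. Scale that appetite up to hundreds of billions of words and it becomes clear why developers scrape whatever text they can find.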

OpenAI, the company behind ChatGPT, fed the tool some 300 billion words systematically scraped from the internet: books, articles, websites and posts – including personal information obtained without consent.

If you’ve ever written a blog post or product review, or commented on an article online, there’s a good chance this information was consumed by ChatGPT.

So Why Is That an Issue?

The data collection used to train ChatGPT is problematic for several reasons.

First, none of us were asked whether OpenAI could use our data. This is a clear violation of privacy, especially when data are sensitive and can be used to identify us, our family members, or our location.

Even when data are publicly available, their use can breach what we call contextual integrity. This is a fundamental principle in legal discussions of privacy. It requires that individuals’ information is not revealed outside of the context in which it was originally produced.

Also, OpenAI offers no procedures for individuals to check whether the company stores their personal information, or to request that it be deleted. This is a guaranteed right under the European General Data Protection Regulation (GDPR) – although it’s still under debate whether ChatGPT is compliant with GDPR requirements.

This “right to be forgotten” is particularly important in cases where the information is inaccurate or misleading, which seems to be a regular occurrence with ChatGPT.

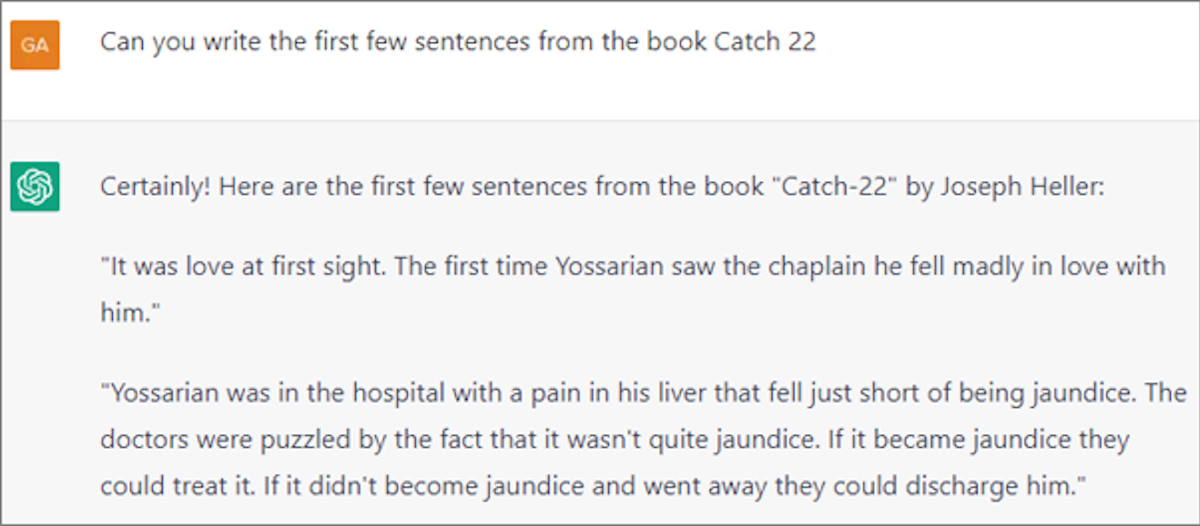

Moreover, the scraped data ChatGPT was trained on can be proprietary or copyrighted. For instance, when I prompted it, the tool produced the first few passages from Joseph Heller’s book Catch-22 – a copyrighted text.

Finally, OpenAI did not pay for the data it scraped from the internet. The individuals, website owners and companies that produced it were not compensated. This is particularly noteworthy considering OpenAI was recently valued at US$29 billion, more than double its value in 2021.

OpenAI has also just announced ChatGPT Plus, a paid subscription plan that will offer customers ongoing access to the tool, faster response times and priority access to new features. This plan will contribute to expected revenue of $1 billion by 2024.

None of this would have been possible without data – our data – collected and used without our permission.

A Flimsy Privacy Policy

Another privacy risk involves the data provided to ChatGPT in the form of user prompts. When we ask the tool to answer questions or perform tasks, we may inadvertently hand over sensitive information and put it in the public domain.

For instance, an attorney may prompt the tool to review a draft divorce agreement, or a programmer may ask it to check a piece of code. The agreement and code, along with the essays the tool outputs, are now part of ChatGPT’s database. This means they can be used to further train the tool, and be included in responses to other people’s prompts.

Beyond this, OpenAI gathers a broad scope of other user information. According to the company’s privacy policy, it collects users’ IP address, browser type and settings, and data on users’ interactions with the site – including the type of content users engage with, features they use and actions they take.

It also collects information about users’ browsing activities over time and across websites. Alarmingly, OpenAI states it may share users’ personal information with unspecified third parties, without informing them, to meet its business objectives.

Time to Rein It In?

Some experts believe ChatGPT is a tipping point for AI – a realisation of technological development that can revolutionise the way we work, learn, write and even think. Its potential benefits notwithstanding, we must remember OpenAI is a private, for-profit company whose interests and commercial imperatives do not necessarily align with greater societal needs.

The privacy risks that come attached to ChatGPT should sound a warning. And as consumers of a growing number of AI technologies, we should be extremely careful about what information we share with such tools.

The Conversation reached out to OpenAI for comment, but it did not respond by deadline.
